Podcast – AWS in Orbit: Space Data and Resiliency

We’re going to be talking to Hawkeye 360, Effectual and AWS Aerospace and Satellite about the power of partnerships in the space industry to ensure

Migrating legacy workloads is your gateway to digital transformation

Migrating legacy workloads to the cloud is the best starting point toward modernization, but it can be an intimidating step to take when your organization’s

Leveraging the Power of Data Modernization in Financial Services: Block’s Transformation Journey

To stay competitive in the dynamic world of financial technology and services, Block Inc. sought to take a bold leap forward with its data and

Data & Analytics Platforms: A Tale of Two Paths – The Right Path is the One You Commit To

Cloud modernization and, more specifically, data analytics in the cloud, can be an exercise in difficult decisions and ultimately taking a stance. When navigating the

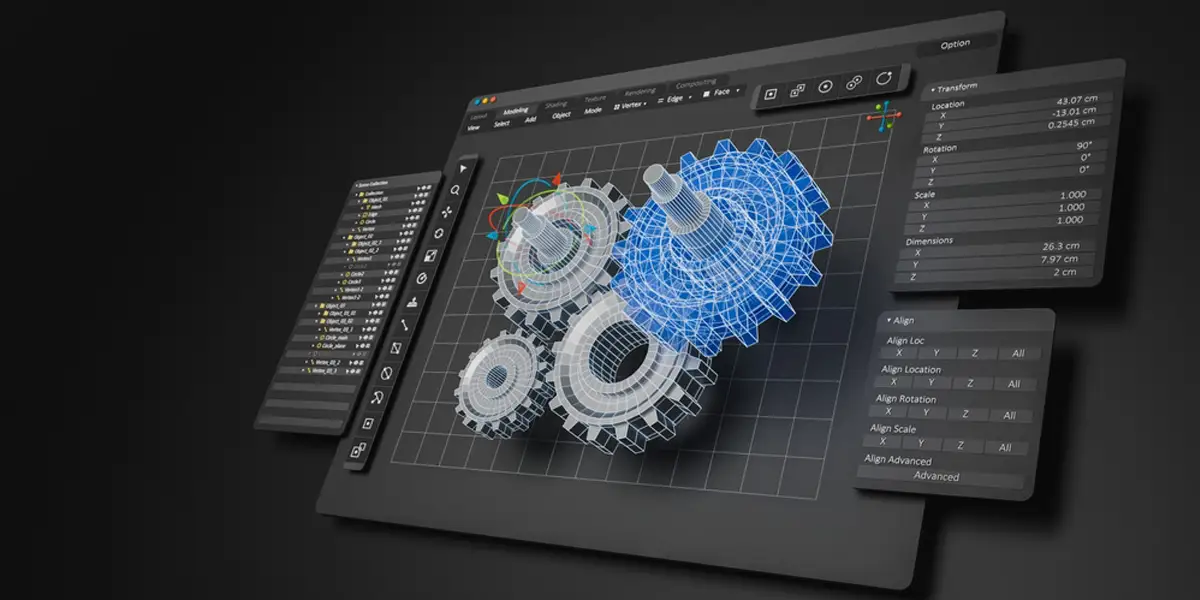

Leveraging the Power of Siemens NX for CAD on AWS AppStream

Traditional CAD design is notoriously resource-intensive. While this isn’t an issue for powerful engineering workstations, it is a problem for remote engineers and distributed teams

Effectual Achieves the new AWS Advertising and Marketing Technology Competency

Effectual, a cloud service provider, announced today that it has achieved the Amazon Web Services (AWS) Advertising and Marketing Technology Competency in the category of

Trading at the Speed of Now: How Kubernetes Powers Real-Time Insights & Rapid Agility in Financial Markets

In the fast-paced world of financial trading — where microseconds can make or break a deal — the reliance on legacy application architecture poses a

Effectual Recognized as HashiCorp Rising Star Partner of the Year

Effectual, a modern, cloud-first managed and professional services company, has been named the 2023 HashiCorp Rising Star Partner of the Year.

Charting the Future of Investment Research: Generative AI’s Transformative Path

The integration of generative artificial intelligence (Generative AI) into financial services is reshaping the industry. Discover how financial organizations can position themselves at the forefront

Effectual Earns HashiCorp Security Competency

Validating its HashiCorp expertise, Effectual now holds the HashiCorp Infrastructure and Security Competencies.