News and Blog

The latest Effectual news, updates, and articles.

Migrating legacy workloads is your gateway to digital transformation

Migrating legacy workloads to the cloud is the best starting point toward modernization, but it can be an intimidating step to take when your organization's success depends on the availability…

Leveraging the Power of Data Modernization in Financial Services: Block’s Transformation Journey

To stay competitive in the dynamic world of financial technology and services, Block Inc. sought to take a bold leap forward with its data and analytics. In early 2022, the…

How To Future-Proof Your VMware Investment

VMware is a clear leader in the virtualization market, controlling roughly 45% of the virtualization software market and representing over 150,000 domains. However, VMware and its technology offerings are undergoing…

Platform Engineering for the Financial Services Industry

A pattern is emerging for financial services and technology companies in the adoption of Platform Engineering as a foundational approach to create self-service infrastructure services, enabling application teams to more…

VMware Cloud on AWS: A Catalyst for IT Modernization

Realizing that they no longer need to be solely reliant on physical data centers, enterprises are accelerating their adoption of cloud-based solutions and native cloud services. While there might be…

Data & Analytics Platforms: A Tale of Two Paths – The Right Path is the One You Commit To

Cloud modernization and, more specifically, data analytics in the cloud, can be an exercise in difficult decisions and ultimately taking a stance. When navigating the landscape of data and analytics…

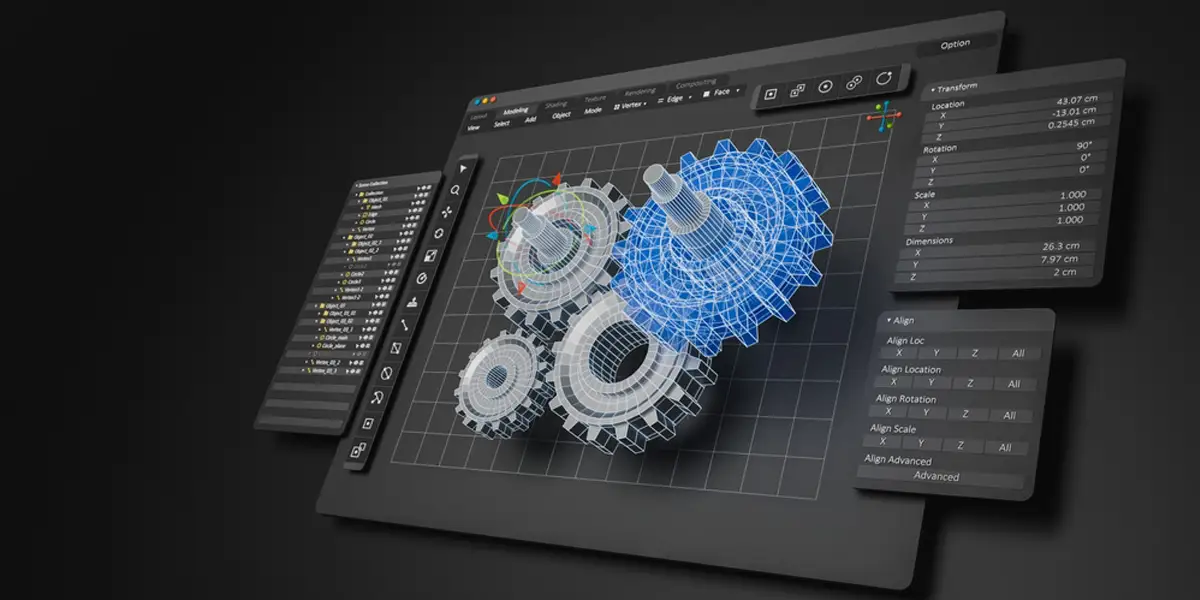

Leveraging the Power of Siemens NX for CAD on AWS AppStream

Traditional CAD design is notoriously resource-intensive. While this isn’t an issue for powerful engineering workstations, it is a problem for remote engineers and distributed teams whose work extends far beyond…

Effectual Achieves the new AWS Advertising and Marketing Technology Competency

Effectual, a cloud service provider, announced today that it has achieved the Amazon Web Services (AWS) Advertising and Marketing Technology Competency in the category of…

Trading at the Speed of Now: How Kubernetes Powers Real-Time Insights & Rapid Agility in Financial Markets

In the fast-paced world of financial trading — where microseconds can make or break a deal — the reliance on legacy application architecture poses a…

Effectual Recognized as HashiCorp Rising Star Partner of the Year

Effectual, a modern, cloud-first managed and professional services company, has been named the 2023 HashiCorp Rising Star Partner of the Year.

Effectual Named a Global Leader in Cloud Computing for Three Consecutive Years

Earning the Stratus Award for Cloud Computing in 2021, 2022, and once again in 2023, Effectual continues to drive innovation in the cloud and develop…

Charting the Future of Investment Research: Generative AI’s Transformative Path

The integration of generative artificial intelligence (Generative AI) into financial services is reshaping the industry. Discover how financial organizations can position themselves at the forefront…

Effectual Earns HashiCorp Security Competency

Validating its HashiCorp expertise, Effectual now holds the HashiCorp Infrastructure and Security Competencies.

Advancing Data Security Management with the AWS Landing Zone Accelerator

As the adoption of new cloud computing technologies accelerates, there is an increased focus on the ethical responsibility of data privacy and protection. Securing sensitive information in data-compliant cloud environments…

Navigating the Evolving Data Landscape: Optimizing Cost and Performance of the Modern Data Stack with dbt Labs and Databricks

Together, dbt and Databricks Yield Massive Cost Savings and Speed when Building Data Pipelines

Unleash the Power of Data and Analytics for Financial Services: Featuring Block’s Transformation Journey

Effectual and AWS recently hosted a live webinar focusing on technological advancements in data, analytics, AI, ML operations, and migration best practices that can benefit…

Reimagine Cloud-Based Data Processing and Optimize Costs with AWS Glue and Snowflake

Snowflake has made a significant impact on the enterprise data landscape with its groundbreaking data warehouse solution. As organizations continue to transition from traditional on-premises solutions to modern, cloud-based platforms…

Effectual Achieves SOC 2 Type 1 and Type 2 Compliance, Demonstrating Commitment to Data Security and Privacy

JERSEY CITY, N.J.; June 7, 2023 – Effectual, an innovative cloud service provider, has achieved System and Organization Controls (SOC) 2 Type 1 and Type 2 compliance following an independent audit…
